COMPUTEGPT

A Computational Chat Model for Numerical Problems

Preprint on arXiv, unpublished due to the release of OpenAI's Code Interpreter

Team: Ryan Hardesty Lewis, Junfeng Jiao

INTRODUCTION

ComputeGPT, created in January 2023, was one of the first implementations of on-demand code execution from an LLM, combining the reasoning power of LLMs with the precision of executable code. Its architecture targets accuracy and efficiency across a wide range of numerical problems by tuning prompts with exact Python methods for each kind of task. Although this was groundbreaking at the time, a month later OpenAI announced Code Interpreter, which mirrored much of the same functionality, and so we chose not to publish.

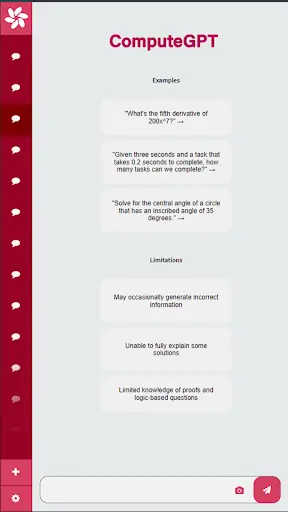

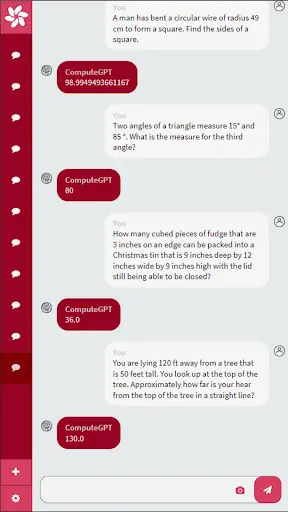

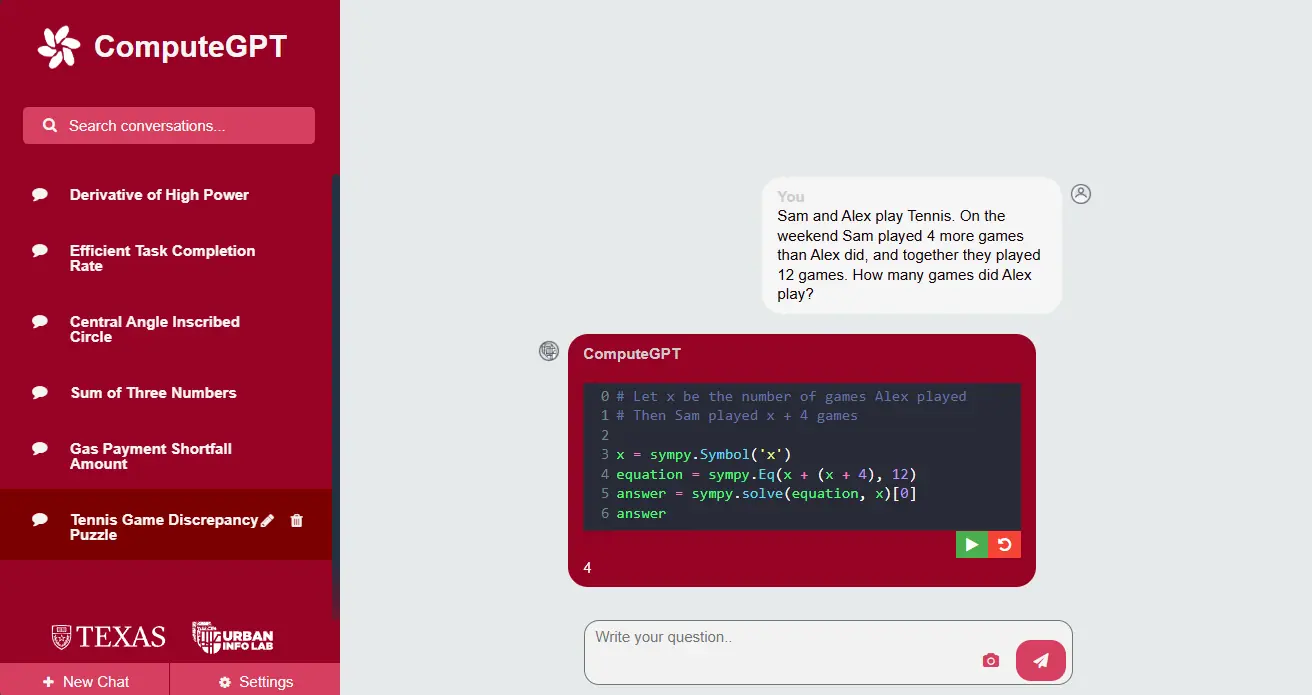

ComputeGPT addresses the known limitation of probabilistic next-token generation in large language models by having them write and run code, effectively turning complex word problems into straightforward calculations. The system allows users to see code in their browser while it executes, offering transparency and an interactive user experience. This approach blends LLM-based reasoning with direct code validation.

INITIAL CONCEPT

When ChatGPT (GPT-3.5) launched, LLMs still fell short in math and coding tasks. We recognized that an LLM could not feasibly store solutions to every problem, but could learn to “use tools” to compute. Our early prototypes exposed code generation flaws in ChatGPT and required extensive prompt tuning. Over time, we developed specialized “ComputeGPT Eval” benchmarks to measure success, because existing benchmarks like HumanEval were tailored more toward Codex, OpenAI’s coding-focused model, than toward general-purpose LLMs. Ultimately, we showed that a carefully guided system could consistently generate and execute code to solve numeric and reasoning tasks.

TECHNOLOGY & IMPLEMENTATION

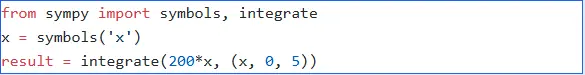

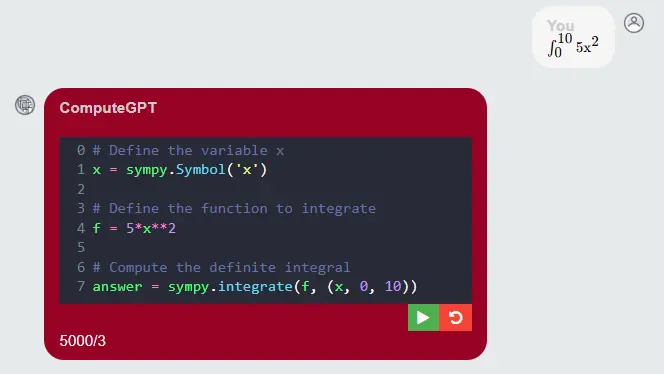

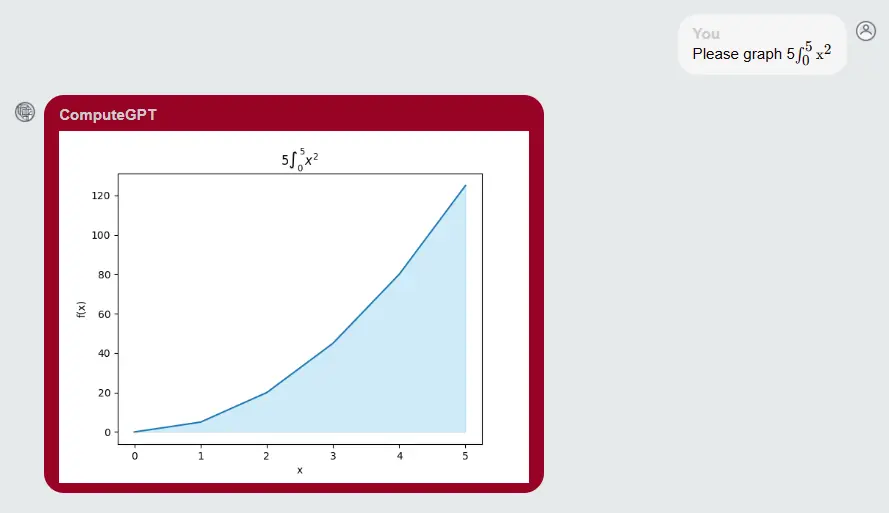

ComputeGPT refines user queries into prompts that produce concise Python scripts. For integrals or other symbolic tasks, it writes matching code and executes it.
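As an illustration of the kind of short script such a prompt might yield, here is a minimal sketch for a definite integral of a polynomial. It is not ComputeGPT's actual output: the function name `integrate_polynomial`, the coefficient-list input format, and the sample query are all assumptions made for this example, and it uses only the standard library so that it runs anywhere, whereas a real generated script might well call a symbolic or numerical library instead.

```python
from fractions import Fraction


def integrate_polynomial(coeffs, a, b):
    """Exact definite integral of sum(c_k * x**k) over [a, b].

    coeffs[k] is the coefficient of x**k (hypothetical input format).
    """
    a, b = Fraction(a), Fraction(b)
    total = Fraction(0)
    for k, c in enumerate(coeffs):
        # Power rule: the antiderivative of c * x**k is c * x**(k + 1) / (k + 1).
        total += Fraction(c) * (b ** (k + 1) - a ** (k + 1)) / (k + 1)
    return total


# Hypothetical query: "What is the integral of 3x^2 + 2x + 1 from 0 to 2?"
result = integrate_polynomial([1, 2, 3], 0, 2)
print(result)
```

Because the arithmetic is carried out by the interpreter with exact fractions, the answer does not depend on the model predicting the right digits token by token.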

All code runs client-side in Pyodide, a CPython distribution compiled to WebAssembly, so generated code never executes on a server and the security risks of running untrusted code server-side are avoided. ComputeGPT then presents both the result and the source code, so users can verify and learn from each solution. This transparency matters most for matrix operations or calculus problems, where line-by-line outputs help clarify the solution’s basis.
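The execute-then-show-both step can be sketched in plain Python as follows. This is a hedged illustration, not the project's implementation: `run_generated_code` and the shape of the returned dictionary are hypothetical, and `exec` by itself provides no isolation. In the setup described above, isolation comes from running inside the browser's WebAssembly sandbox.

```python
import contextlib
import io


def run_generated_code(source):
    """Execute a generated script and return its source with captured output."""
    buffer = io.StringIO()
    namespace = {}  # fresh globals so separate runs do not share state
    try:
        # Capture anything the script prints instead of writing to the console.
        with contextlib.redirect_stdout(buffer):
            exec(source, namespace)
        error = None
    except Exception as exc:
        # Report the failure to the user rather than crashing the interface.
        error = f"{type(exc).__name__}: {exc}"
    return {"source": source, "output": buffer.getvalue(), "error": error}


# Hypothetical generated script for "How many ways to choose 3 items from 10?"
generated = "import math\nprint(math.comb(10, 3))"
report = run_generated_code(generated)
print(report["source"])
print(report["output"])
```

Returning the source alongside the output is what lets an interface display both, so a user can read, rerun, or edit the script that produced the answer.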

This approach merges accuracy, security, and user understanding. By revealing the code and the reasoning behind each answer, we open up the black box and foster trust and deeper user engagement with the math behind the scenes.

RESULTS & IMPACT

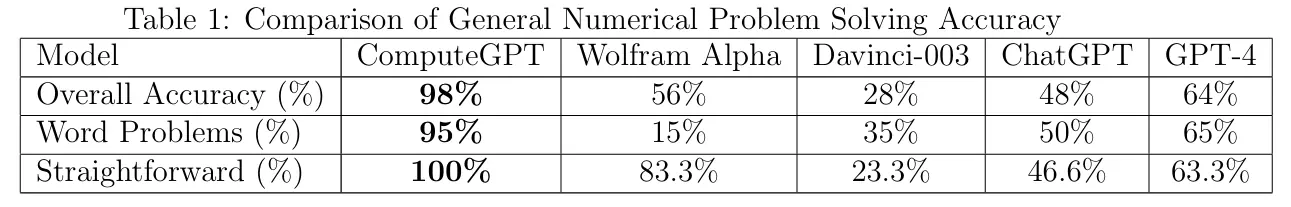

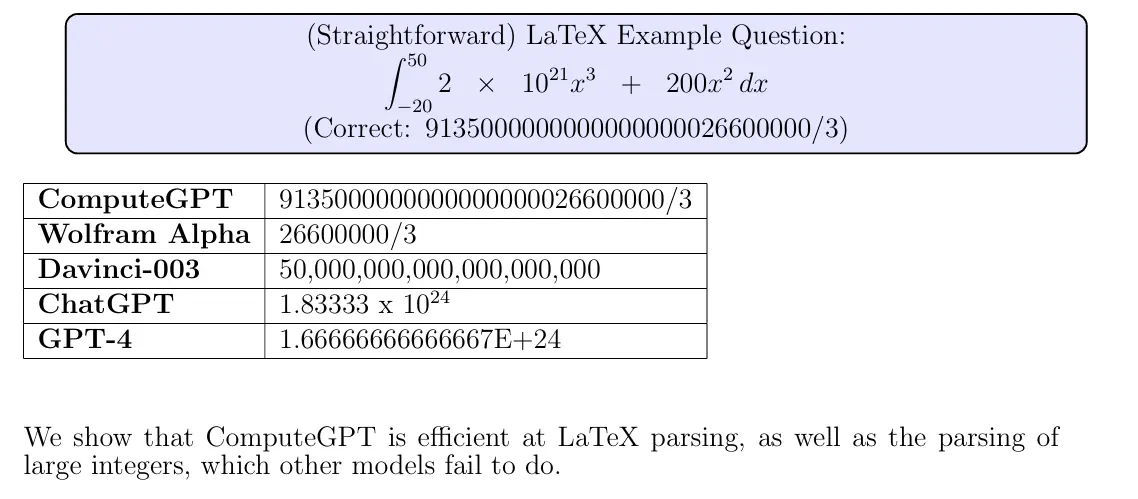

ComputeGPT has been rigorously tested against industry stalwarts like GPT-4, Bing Chat, Davinci-003, and Wolfram Alpha. Thanks to code generation and live execution, in our evaluation it resolved typical math tasks (e.g., integrals) with 100% accuracy and multi-step word problems with a 95% success rate. This outperforms commercial models that rely on purely generative text without verified computation.
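For readers who want to see how success rates of this kind can be computed, the sketch below scores a solver against expected numeric answers with a tolerance. Everything in it is an assumption for illustration: the `score` function, the `toy_solver` stand-in, and the four sample cases are placeholders and are not items or code from the ComputeGPT Eval benchmark.

```python
import math


def score(cases, solve, rel_tol=1e-9):
    """Fraction of cases where solve(question) matches the expected number."""
    correct = 0
    for question, expected in cases:
        try:
            answer = solve(question)
        except Exception:
            continue  # a crash counts as a wrong answer
        if math.isclose(answer, expected, rel_tol=rel_tol):
            correct += 1
    return correct / len(cases)


# Hypothetical placeholder cases, not taken from any real benchmark.
cases = [("2 + 2", 4.0), ("10 / 4", 2.5), ("3 ** 2", 9.0), ("1 / 0", 0.0)]


def toy_solver(question):
    # Stand-in for "generate code, then run it": evaluates plain arithmetic only.
    return float(eval(question, {"__builtins__": {}}))


accuracy = score(cases, toy_solver)
print(accuracy)
```

Comparing with a relative tolerance rather than exact equality avoids penalizing answers that differ only by floating-point rounding.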

Our evaluation suggests that the performance of ComputeGPT and OpenAI’s Code Interpreter is nearly identical, reflecting how on-demand code execution significantly augments an LLM’s reliability. By shifting complex computations from pure text generation to proven algorithms, we bypass fundamental constraints in large language models.

PROTOTYPE

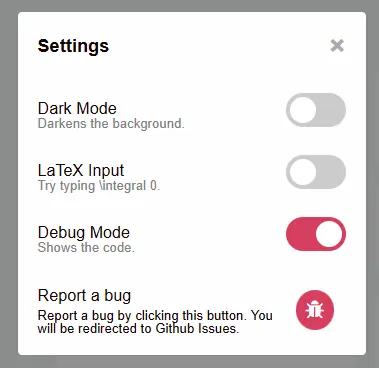

The latest ComputeGPT prototype combines a robust computational backend with a user-friendly interface. Accessible at https://computegpt.org, it supports mobile devices, accepts LaTeX inputs, displays charts and code, and allows users to tweak generated Python. By placing computations directly in the hands of the user, we deliver an experience that is both secure and thoroughly transparent.

ACKNOWLEDGEMENTS

We want to thank Wolfram Alpha, OpenAI, and Bing for access to their models during evaluation.